In short

- Function calling allows language models to access real-time information and interact with external systems.

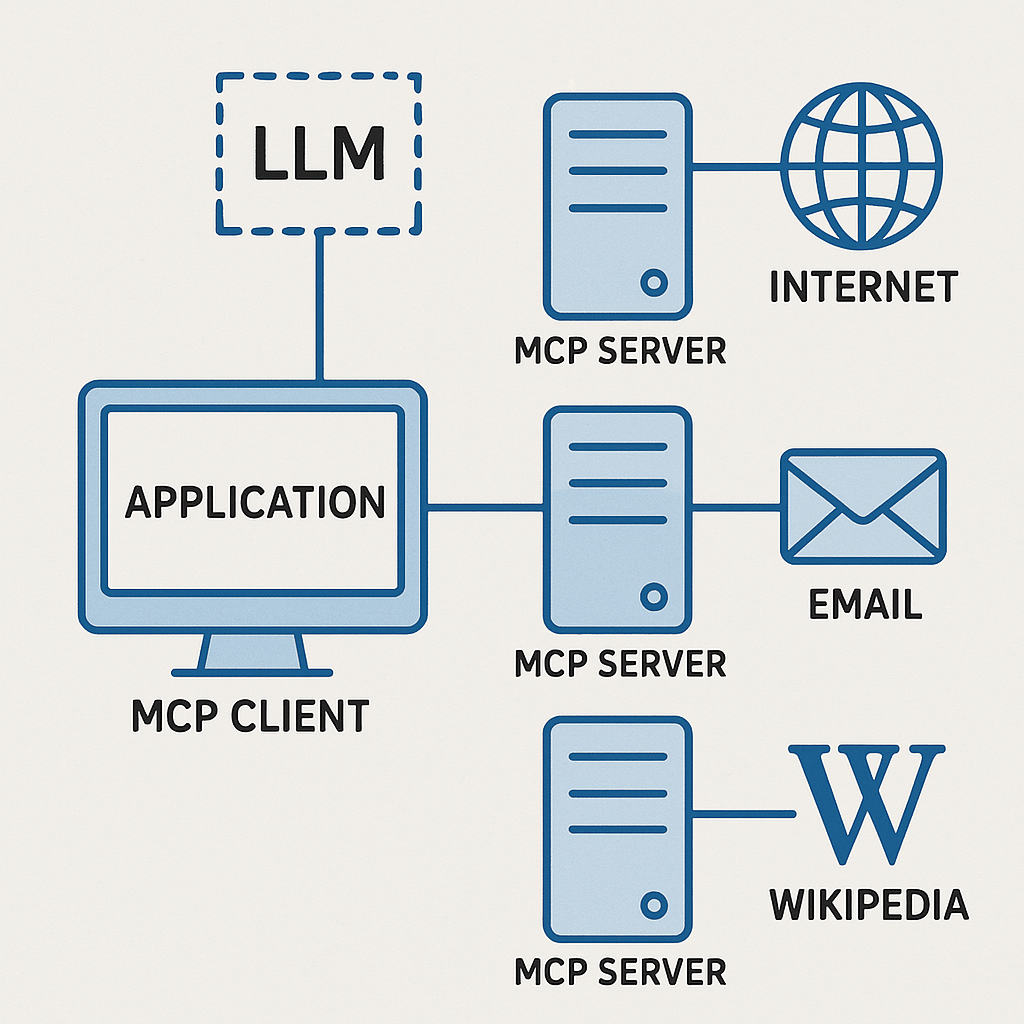

- The Model Context Protocol (MCP) standardizes communication between AI applications and language models, similar to how W3C standards unified web browsers.

- MCP consists of three components: client (integrated into host applications), server (implements prompts, resources, and tools), and transport layer (for communication).

- MCP uses JSON-RPC messages for calls between clients and servers.

- By reducing development redundancy and creating shared standards, MCP accelerates AI adoption and integration into applications.

Most large language models (LLMs) have a knowledge cutoff that lies months in the past, and by default they can’t communicate with external systems. This means they can’t search the web, access documents, browse your file system, or read and send emails. Function calling provides the solution: it allows LLMs to connect (indirectly) with these external systems and expand their capabilities beyond their built-in knowledge. With function calling, you define functions with metadata and provide them to the LLM. The LLM decides whether one of these functions should be called and returns that decision to the application.

The Model Context Protocol (MCP) is the bridge between an AI-powered application and an LLM that enables function calling. It is a contract on how the communication should take place. It was developed by Anthropic and released in November 2024. Many MCP servers have already been developed by the community, and more companies are adding support. To understand MCP, it’s important to first understand function calling.

What is function calling?

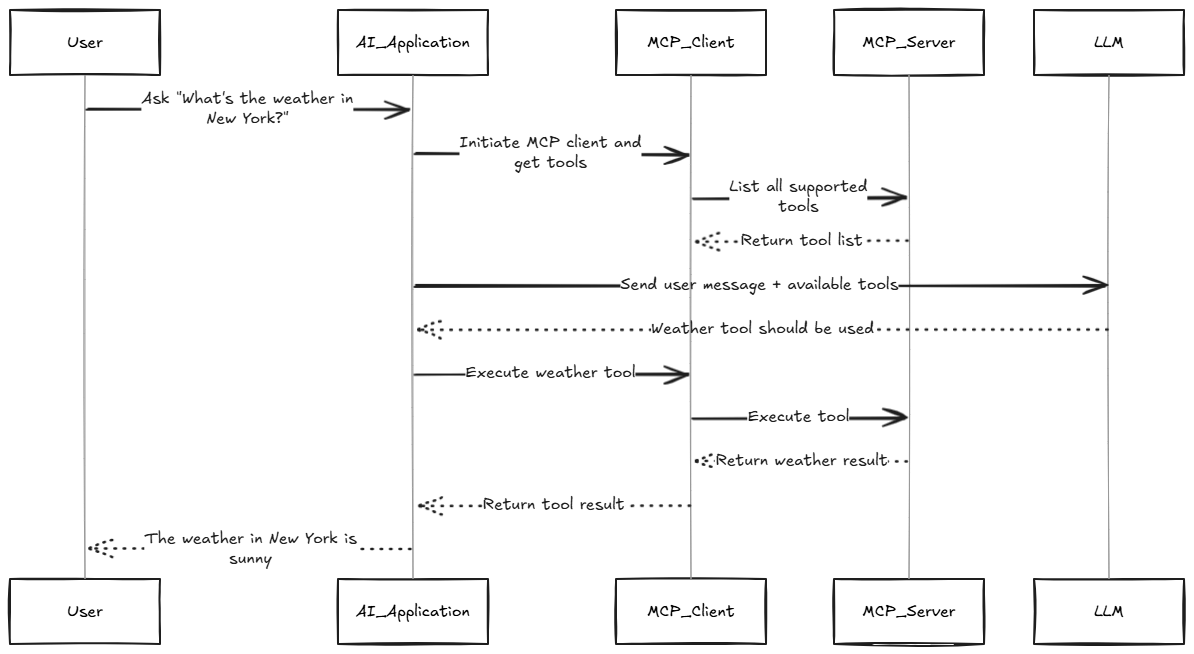

Let’s say that you are developing a chat application in which users should be able to ask what the weather is in a specific city. That is real-time information which the LLM can’t access by default. With function calling, you allow an LLM to talk indirectly with the ‘outside world’. More concretely, you provide an LLM with function definitions (name, description and parameters of each function), and the LLM determines, based on the user input, whether a function should be called. The LLM returns the name and arguments of the function that the application should call.

- User asks in the chat application what the current weather is in New York.

- The application sends a request to the LLM that includes a function definition: get_weather(city).

- The LLM determines that get_weather('New York') should be called and returns that to the application.

- The application identifies the function call (get_weather('New York')) in the response of the LLM and calls that function.

- The application presents the response of the function to the user.

Let’s look at this example from OpenAI. First, this is how the client (application) provides the LLM with function definitions.

```python
from openai import OpenAI

client = OpenAI()

tools = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get current temperature for a given location.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "City and country e.g. Bogotá, Colombia"
                }
            },
            "required": [
                "location"
            ],
            "additionalProperties": False
        },
        "strict": True
    }
}]

completion = client.chat.completions.create(
    model="gpt-4o",
    messages=[{"role": "user", "content": "What is the weather like in Paris today?"}],
    tools=tools
)

print(completion.choices[0].message.tool_calls)
```

Below you can see that the LLM determined that the weather function should be called and returned that to the client.

```json
[{
    "id": "call_12345xyz",
    "type": "function",
    "function": {
        "name": "get_weather",
        "arguments": "{\"location\":\"Paris, France\"}"
    }
}]
```

In the last step, the application should actually call the function. Now, let’s move on with discussing why we need MCP.
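That last step, executing the function the LLM asked for, can be sketched as follows. This is a minimal illustration that works on the JSON form of the tool call shown above. The get_weather body and the AVAILABLE_FUNCTIONS registry are hypothetical stand-ins, not part of the OpenAI example.

```python
import json


def get_weather(location: str) -> str:
    """Hypothetical stand-in for a real weather lookup."""
    return f"Sunny in {location}"


# Maps the function names advertised to the LLM to local callables (assumed registry).
AVAILABLE_FUNCTIONS = {"get_weather": get_weather}


def execute_tool_call(tool_call: dict) -> dict:
    """Run one tool call and build the tool message to send back to the LLM."""
    name = tool_call["function"]["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    # The arguments arrive as a JSON-encoded string, not as a dict.
    arguments = json.loads(tool_call["function"]["arguments"])
    result = AVAILABLE_FUNCTIONS[name](**arguments)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": result}


tool_call = {
    "id": "call_12345xyz",
    "type": "function",
    "function": {"name": "get_weather", "arguments": "{\"location\":\"Paris, France\"}"},
}
tool_message = execute_tool_call(tool_call)
print(tool_message["content"])
```

The returned message can be appended to the conversation and sent back to the LLM, which then phrases the final answer for the user.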

Function calling already works so why do we need the Model Context Protocol?

In the previous example, you saw how OpenAI implemented function calling. Even though most companies follow OpenAI’s implementation, it’s not an open standard (protocol) that everyone implements. That means that client applications must create a different implementation for each LLM, and if you developed an API that you want to expose as a function, it must support all the different LLMs. Of course, this causes a lot of compatibility problems. It’s a bit similar to how browsers operated before W3C standards, when each browser rendered HTML/CSS differently. This caused interoperability problems for web developers, who had to write custom implementations for each browser.

Where W3C created a standard for browsers and HTML/CSS, the Model Context Protocol provides a standardized way of communication between client applications and LLMs. We can break down the Model Context Protocol into three components: client, server, and transport layer.

MCP Client

An MCP client is initialized in a host application. Examples of host applications are Claude Desktop, Cursor, or any custom application. A client creates connections to all configured MCP servers and can request prompts, read resources, or execute tools (functions). It’s up to the host application to decide when and how to display the data returned by an MCP server.

MCP Server

An MCP server (usually) runs separately from the client. It can implement prompts, resources and tools.

| Capability | Description |
| --- | --- |
| Prompts | Reusable prompt templates, with dynamic values, that clients can present to users or pass directly to LLMs. Example: Can you summarize this text: {text} |
| Resources | Return data to clients that can be used as context for an LLM. Compare this to a Web API GET endpoint. Example: A resource for getting all contacts from a database |
| Tools | Functions that the server exposes. The client retrieves their definitions, an LLM determines if a function should be called, and the server executes it. Example: A tool for saving a contact in a database |
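To make the difference between the three capabilities concrete, here is a minimal sketch in plain Python. It does not use any MCP SDK. The function names and the in-memory contacts list are assumptions chosen to mirror the examples in the table.

```python
# In-memory stand-in for a database (hypothetical data).
contacts = ["John Doe", "Jane Doe"]


def summarize_prompt(text: str) -> str:
    """Prompt: a reusable template with a dynamic value."""
    return f"Can you summarize this text: {text}"


def read_contacts() -> str:
    """Resource: read-only data that can serve as context for an LLM."""
    return ", ".join(contacts)


def save_contact(name: str) -> str:
    """Tool: an action with a side effect, called when the LLM asks for it."""
    contacts.append(name)
    return f"Saved {name}"


print(summarize_prompt("MCP standardizes tool access."))
print(save_contact("Charlie Brown"))
print(read_contacts())
```

The split matters mostly for the host application: prompts are typically selected by the user, resources are attached as context, and tools are invoked on the model's request.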

Transport layer

The transport layer facilitates the communication between client and server. Currently, two mechanisms are available, but it’s also possible to create a custom one.

Stdio transport

Stdio stands for standard input/output. It’s a way for a process to exchange data with other processes through its input and output streams. In Node.js, these streams are available as the process.stdin and process.stdout objects:

```javascript
// demo.js: echo everything received on stdin back to stdout
process.stdin.on('data', (data) => {
  process.stdout.write(`Echo: ${data}`);
});
```

With stdout you can write data from your application, and with stdin you can receive or read incoming messages. The command below prints ‘Echo: Hello from a terminal!’.

```bash
echo "Hello from a terminal!" | node demo.js
```

So when you use Node.js and stdio as the transport layer, the client and server are using process.stdin and process.stdout to communicate.
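On top of these raw streams, messages need a framing rule so the receiver knows where one message ends. The sketch below assumes newline-delimited JSON (one JSON-RPC message per line), which is how I understand MCP's stdio transport to work. The serve function and the echo method are hypothetical, and in-memory streams stand in for the real stdin and stdout.

```python
import io
import json
from typing import TextIO


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """Read one JSON message per line and answer each with an echo response."""
    for line in stdin:
        line = line.strip()
        if not line:
            continue  # skip blank lines between messages
        request = json.loads(line)
        response = {
            "jsonrpc": "2.0",
            "id": request["id"],
            "result": f"Echo: {request['params']['message']}",
        }
        # One message per line: serialize without embedded newlines, then terminate.
        stdout.write(json.dumps(response) + "\n")
        stdout.flush()


# In-memory streams replace the process streams for this demonstration.
fake_stdin = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "echo", "params": {"message": "hello"}}\n'
)
fake_stdout = io.StringIO()
serve(fake_stdin, fake_stdout)
print(fake_stdout.getvalue(), end="")
```

In a real server process you would pass sys.stdin and sys.stdout instead of the StringIO objects.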

HTTP with SSE transport

If an MCP server is hosted externally, then HTTP with SSE (server-sent events) transport can be used. When a client connects to a server, it executes a GET request with the header Accept: text/event-stream. The server keeps this request open and communicates back to the client over the open stream. The initial request looks like this:

```http
GET /events HTTP/1.1
Host: localhost:3000
Accept: text/event-stream
Connection: keep-alive
```

After this, the server is able to send data back to the client as long as the connection remains open.

```http
HTTP/1.1 200 OK
Content-Type: text/event-stream
Cache-Control: no-cache
Connection: keep-alive

data: Hello 1

data: Hello 2

data: Hello 3

```

The client and server communicate by exchanging JSON-RPC messages over the open connection.
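To show how a client might interpret such a stream, here is a small sketch of an event-stream parser. It handles only the data and event fields and comment lines. The function name parse_sse is an assumption, and a real client would parse incrementally as bytes arrive rather than from one complete string.

```python
def parse_sse(stream_text: str) -> list[dict]:
    """Split a text/event-stream body into events; a blank line ends an event."""
    events = []
    event_type = "message"  # default event type when no event field is sent
    data_lines = []
    for line in stream_text.splitlines():
        if line == "":
            # Blank line: dispatch the event collected so far, if it has data.
            if data_lines:
                events.append({"event": event_type, "data": "\n".join(data_lines)})
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "data":
                data_lines.append(value)
            elif field == "event":
                event_type = value
    # An event without a terminating blank line is incomplete and not returned.
    return events


body = "data: Hello 1\n\ndata: Hello 2\n\ndata: Hello 3\n\n"
for event in parse_sse(body):
    print(event["event"], event["data"])
```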

JSON-RPC: Lightweight protocol for Remote function calls

JSON-RPC (Remote Procedure Call) is a protocol that allows clients to invoke methods on remote systems using JSON format. Because it uses standard JSON encoding, it works across any programming language.

How it works:

- The client sends a request to the server in JSON format.

- The server receives the JSON message and executes the specified function.

- The server returns the function response in JSON format back to the client.

- The client receives the JSON response and processes the results.

A client that instructs the server to execute the add function:

```json
{
  "jsonrpc": "2.0",
  "method": "add",
  "params": [10, 20],
  "id": 1
}
```

| Field | Description |
| --- | --- |
| jsonrpc | Protocol version. |
| method | Function that should be executed by the server. |
| params | Params passed to the function. |
| id | A unique identifier for the request. |

Server to client response:

```json
{
  "jsonrpc": "2.0",
  "result": 30,
  "id": 1
}
```

| Field | Description |
| --- | --- |
| jsonrpc | Protocol version. |
| result | The result of the function. |
| id | Identifier that matches the id of the request. |
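The request and response above can be reproduced with a small dispatcher. This is a sketch rather than a complete JSON-RPC implementation: it ignores batches and notifications, and the METHODS registry and handle_request name are assumptions. The error codes (-32700, -32600, -32601) are the ones defined by JSON-RPC 2.0.

```python
import json


def add(a, b):
    return a + b


# Methods the server exposes, keyed by the name used in the "method" field.
METHODS = {"add": add}


def error_response(code: int, message: str, request_id) -> str:
    return json.dumps({"jsonrpc": "2.0", "error": {"code": code, "message": message}, "id": request_id})


def handle_request(raw: str) -> str:
    """Decode one JSON-RPC request, run the method, and encode the response."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return error_response(-32700, "Parse error", None)
    if not isinstance(request, dict):
        return error_response(-32600, "Invalid Request", None)
    method = METHODS.get(request.get("method"))
    if method is None:
        return error_response(-32601, "Method not found", request.get("id"))
    params = request.get("params", [])
    # Params may be positional (a list) or named (an object).
    result = method(**params) if isinstance(params, dict) else method(*params)
    return json.dumps({"jsonrpc": "2.0", "result": result, "id": request.get("id")})


print(handle_request('{"jsonrpc": "2.0", "method": "add", "params": [10, 20], "id": 1}'))
```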

Let’s take a look at an example of how an MCP client uses JSON-RPC to interact with an MCP server when executing a tool. First, the client establishes a connection to the server by sending an initial request. This connection remains open so that the server can send data back to the client.

```http
GET /sse HTTP/1.1
Host: localhost:3001
Accept: text/event-stream
Accept-Encoding: gzip, deflate
Accept-Language: *
Cache-Control: no-cache
Connection: keep-alive
Pragma: no-cache
Sec-Fetch-Mode: cors
User-Agent: node
```

The client sends an HTTP POST request to invoke the “echo” tool with a message parameter.

```http
POST /messages?sessionId=080ab0a9-3e3c-4da7-ae9c-3bb75d096d29 HTTP/1.1
Host: localhost:3001
Accept: */*
Accept-Encoding: gzip, deflate
Accept-Language: *
Connection: keep-alive
Content-Length: 109
Content-Type: application/json
Sec-Fetch-Mode: cors
User-Agent: node

{
  "method": "tools/call",
  "params": {
    "name": "echo",
    "arguments": {
      "message": "hello world"
    }
  },
  "jsonrpc": "2.0",
  "id": 1
}
```

The server processes the JSON-RPC request and executes the requested function. It then sends a JSON-RPC response back to the client over the open SSE connection. The result of the function looks like this:

```json
{
  "content": [
    {
      "type": "text",
      "text": "Tool echo: hello world"
    }
  ]
}
```

Node.js example: MCP client and server with HTTP SSE

A great start is to use the official TypeScript SDK. Remember that an MCP client is not a chat application itself, but rather integrated into a chat application. First, ensure that you’ve installed the @modelcontextprotocol/sdk NPM package. In the example below, I’m using the SSEClientTransport for connecting to my MCP server, which is running on localhost:3001.

```typescript
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import { SSEClientTransport } from "@modelcontextprotocol/sdk/client/sse.js";

const transport = new SSEClientTransport(new URL("http://localhost:3001/sse"));

const client = new Client(
  {
    name: "client",
    version: "1.0.0"
  }
);

await client.connect(transport);
```

After that, you can retrieve prompts, resources and execute functions that are running on your MCP server.

```typescript
// List available prompts
const prompts = await client.listPrompts();
console.log("Available prompts:", prompts);

// Get prompt
const prompt = await client.getPrompt({ name: "introduction-email", arguments: { name: "John Doe" } });
console.log("Result:", JSON.stringify(prompt));

// List resources
const resources = await client.listResources();
console.log("Available resources:", resources);

// Read resource
const resource = await client.readResource({ uri: "contacts://persons" });
console.log("Result:", resource);

// List available tools
const tools = await client.listTools();
console.log("Available tools:", tools);

// Call a tool
const result = await client.callTool({
  name: "save-person",
  arguments: {
    name: "Charlie Brown",
    age: 70,
    function: "Manager",
    keywords: ["manager", "leader"]
  }
});
console.log("Result:", result);
```

For the implementation of the MCP server you can use the McpServer class. The McpServer includes methods to specify prompts, resources and tools. In the example, you can see that I created a simple db variable that holds a list of person objects. This variable is used in both the resource and the tool.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { SSEServerTransport } from "@modelcontextprotocol/sdk/server/sse.js";
import express, { Request, Response } from "express";
import getRawBody from "raw-body"; // used by the /messages endpoint
import { z } from "zod";

const server = new McpServer({
  name: "Contacts MCP Server",
  version: "1.0.0"
});

// In-memory stand-in for a database
const db = [
  { id: 1, name: "John Doe", age: 30, function: "Software Engineer", keywords: ["engineer", "software", "developer"] },
  { id: 2, name: "Jane Doe", age: 25, function: "Data Scientist", keywords: ["data", "scientist", "analytics"] },
  { id: 3, name: "Jim Doe", age: 35, function: "Product Manager", keywords: ["product", "manager", "lead"] },
];

// Prompt: reusable template with a dynamic value
server.prompt(
  "introduction-email",
  { name: z.string() },
  ({ name }) => ({
    messages: [{
      role: "user",
      content: {
        type: "text",
        text: `Write an introduction email to: ${name}`
      }
    }]
  })
);

// Resource: returns the names of all contacts
server.resource(
  "contacts",
  "contacts://persons",
  async (uri) => ({
    contents: [{
      uri: uri.href,
      text: db.map((item) => `${item.name}`).join(", ")
    }]
  })
);

// Tool: saves a new person in the db
server.tool(
  "save-person",
  { name: z.string(), age: z.number(), function: z.string(), keywords: z.array(z.string()) },
  async ({ name, age, function: func, keywords }) => {
    db.push({ id: db.length + 1, name, age, function: func, keywords });
    return {
      content: [{ type: "text", text: `Person saved in the database` }]
    };
  }
);
```

In order to accept new connections from clients and receive messages to process, the MCP server needs to implement two endpoints.

| Endpoint | Description |
| --- | --- |
| /sse | This endpoint is called when a client connects to the MCP server. For each new connection, an SSEServerTransport is created that under the hood generates a unique sessionId. The transport is added to a list which is kept in memory. The sessionId is communicated back to the client. The request is kept open. |
| /messages | Each time a client requests a prompt, resource, or tool, this endpoint is called. The sessionId is passed in the query string of the request. This allows the server to communicate back to the client using the open request. |

```typescript
const app = express();

// Open transports, keyed by sessionId
const transports: {[sessionId: string]: SSEServerTransport} = {};

app.get("/sse", async (_: Request, res: Response) => {
  const transport = new SSEServerTransport('/messages', res);
  transports[transport.sessionId] = transport;
  res.on("close", () => {
    delete transports[transport.sessionId];
  });
  await server.connect(transport);
});

app.post("/messages", async (req: Request, res: Response) => {
  let body = await getRawBody(req, {
    limit: "4mb",
    encoding: "utf-8",
  });
  const sessionId = req.query.sessionId as string;
  const transport = transports[sessionId];
  if (transport) {
    console.log(`Handling message for sessionId: ${sessionId} request body: ${body}`);
    await transport.handlePostMessage(req, res, body);
  } else {
    res.status(400).send('No transport found for sessionId');
  }
});

app.listen(3001);
```

MCP is growing rapidly, with many developers and companies creating new servers. It provides a uniform solution for communication between AI applications and the outside world. By standardizing how LLMs interact with external tools and data sources, MCP reduces development redundancy and allows for innovative solutions.